Can you identify the author of the original story used to prompt (start off) the generated text below? The title is also generated.

Afterwards, please visit Episode 2: The Bloody Tale.

The generated text is followed by the classic story that supplied the prompt for the computer generation. For technical details, see the Notes at the end.

Character names from the original text are changed wherever they appear in the generated text. Only paragraph breaks and the like were added for clarity.

Made on my creativity app Story Live

The Distant Shout of the Great Storm

It seems that I didn’t remember much, but my memory has been erased as much as any of the others. This was really quite disorienting. I just remember feeling that “something” was happening.

“Aaah… This… It feels right to be in this place… That sounds horrible… Do I really have to die? My thoughts are starting to run away from me, and I’m beginning to feel as if something is wrong with me, and I can’t be sure I know where I am… I can’t be sure of it but… I think I’m getting close, and maybe I can keep moving forward for just a little longer… ”

With that last part, I started moving my body. After moving for a moment, it looked like I was going to try to run away, but I got stuck. There was no way that I was going to pull myself back onto my feet. I needed to get back to my feet as quickly as possible. Maybe I couldn’t run anymore.

The Count, seeing his life gone away with himself, and his brother-in-arms and his friends at the foot of the steps to the entrance of the tower, seized the rapier, and plunged it in the back of his throat; a blow of his thumb, by which the blood rolled upon the floor like a thin sheet, sent the blade through his shoulder and through his spine, and severed his heart.

“Ah, this be the death of all the good! Now be thou slain, let thy spirit go in peace, and myself be dead.”

But as he made an attempt at speech, he heard in the distance the distant shout of the great storm. He saw the pallid faces of many of the men who had fallen of his hand; and with each one raised his dead hands with their dead fingers they raised it again before the black, black, black face of the death, while he staggered away from the tower, pale-faced and pale.

Meanwhile, through the crowd of the dead, a faint flame rose up behind the white curtain. And when the red flame blazed, from the tower, on the walls, all the dead went down behind the curtain.

And now the darkness of ages flowed out from one of the steps.

“For me now to make my home in the ashes of dead men,” said an old man that night in all his glory, on his grave, “if I would. I will see me now, in the sun of a new day, as I remember my old days; as they were, to me they were; and on this grave, and with this pale face, and in these hands, as they should be in my old hands, no more, to be, and to live, in these dead men.

“This world shall be dead, as is the world that came before it, when the world came to be in man’s body.

Then the prince went alone into the black apartment—and with an irresistible vigour, without fear, without regret, came to the last step of the staircase. He went there with a quickness, that neither his feet, nor his arm, nor the arm of any of the party, made a noise in motion, and with suddenness, that neither his feet nor his face, nor his body, or the last step of the staircase, could move, and for a moment the light of the candle, which was not disturbed, glittered.

At last he was called down the stairway; and as he sat down he perceived the presence of the death, and said aloud aloud:—

“Hail! O good man! What a night—and all things that are born of a man are, in the dark hours of his day, as dead as those which lie before him: and he in the dark days of his day, though all things that are born of him should be, in the light and sunshine of his day be, as they were before him, but all things that were before him were dead, in the dark hours of his day.”

Original

The Masque of the Red Death

Edgar Allan Poe 1842

The “Red Death” had long devastated the country. No pestilence had ever been so fatal, or so hideous. Blood was its Avatar and its seal—the redness and the horror of blood. There were sharp pains, and sudden dizziness, and then profuse bleeding at the pores, with dissolution. The scarlet stains upon the body and especially upon the face of the victim, were the pest ban which shut him out from the aid and from the sympathy of his fellow-men. And the whole seizure, progress and termination of the disease, were the incidents of half an hour.

But the Prince Prospero was happy and dauntless and sagacious. When his dominions were half depopulated, he summoned to his presence a thousand hale and light-hearted friends from among the knights and dames of his court, and with these retired to the deep seclusion of one of his castellated abbeys. This was an extensive and magnificent structure, the creation of the prince’s own eccentric yet august taste. A strong and lofty wall girdled it in. This wall had gates of iron. The courtiers, having entered, brought furnaces and massy hammers and welded the bolts. They resolved to leave means neither of ingress nor egress to the sudden impulses of despair or of frenzy from within. The abbey was amply provisioned. With such precautions the courtiers might bid defiance to contagion. The external world could take care of itself. In the meantime it was folly to grieve, or to think. The prince had provided all the appliances of pleasure. There were buffoons, there were improvisatori, there were ballet-dancers, there were musicians, there was Beauty, there was wine. All these and security were within. Without was the “Red Death”.

It was towards the close of the fifth or sixth month of his seclusion, and while the pestilence raged most furiously abroad, that the Prince Prospero entertained his thousand friends at a masked ball of the most unusual magnificence.

Notes

The prompt was 10-20 words from the original. The text was generated with the GPT-2 system by Fabrice Bellard; see the site below for credits etc.

Made on my creativity app Story Live – please visit.

Text from Project Gutenberg free classic ebooks

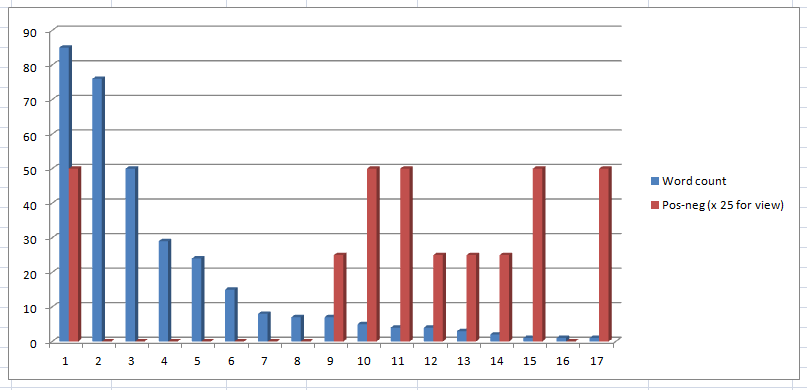

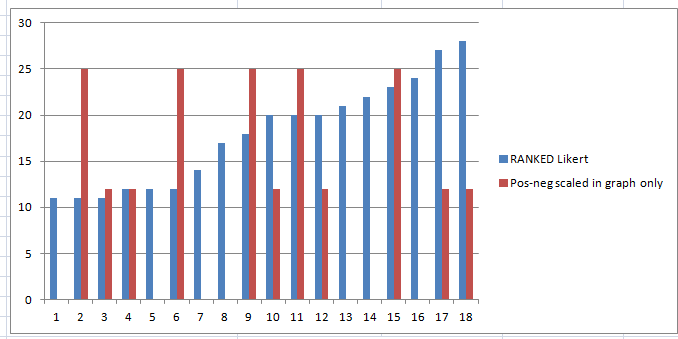

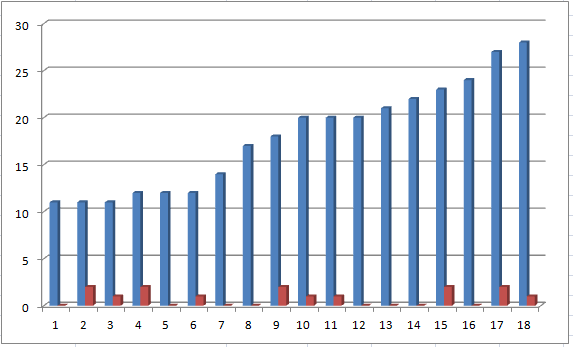

The original excerpt above is 323 words out of 2417. The generated text is 712 words.

There was a certain amount of cherry-picking of the most grammatical and interesting generated parts, but no actual editing (no words were moved around or added). Identifying names are changed.
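For the curious, here is a minimal sketch of how a 10-20 word prompt could be cut from the opening of the original and how the word counts in these notes could be checked. It is only an illustration, not the tool actually used: the helper names `leading_words` and `word_count` are made up for this example, the choice of 15 words is an arbitrary value inside the 10-20 range, and words are simply split on whitespace.

```python
def leading_words(text: str, n_words: int) -> str:
    """Return the first n_words whitespace-separated words of text."""
    return " ".join(text.split()[:n_words])


def word_count(text: str) -> int:
    """Count whitespace-separated words in text."""
    return len(text.split())


# Opening sentences of the original story, as quoted above.
opening = (
    "The “Red Death” had long devastated the country. "
    "No pestilence had ever been so fatal, or so hideous."
)

# Assumption: 15 words, an arbitrary pick within the 10-20 word range.
prompt = leading_words(opening, 15)

print(prompt)
print(word_count(prompt))
```

The same `word_count` helper can be run over the full original and the generated text to reproduce counts like the ones given above, although a different definition of a "word" will give slightly different numbers.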

More of these will be posted; see the index.