Index for AI research blogs / Geoff Davis UAL CCI London

2023

Please visit Geoff Davis Mood Bias & Happy Ending Syndrome page

2022

AI text and writing research, which you might have been involved in as a writer, is now online at UAL Research:

https://ualresearchonline.arts.ac.uk/id/eprint/18621/

(Summer 2020, using my app with Fabrice Bellard’s Text Synth; see Story Live with GPT-J 6B, NeoX 20B etc.)

I am doing research into computer text generation, specifically machine learning and text generation, in the field generally known as AI or artificial intelligence, at University of the Arts London (UAL) Creative Computing Institute (CCI), Camberwell, London. My research supervisor is Professor Mick Grierson (see credits at bottom). I’m also making other practice-based artworks; these are currently experiments only.

I previously programmed story generators on a Sinclair ZX Spectrum, in the days of home microcomputers. The first computer poems were generated in 1953, so literature and computing have a long shared history.

2020 research: what happens when writers use a computer text generator to help write various types of articles? What are their experiences when doing this hybrid activity? This is open-ended (no hypothesis, grounded research) and multi-modal.

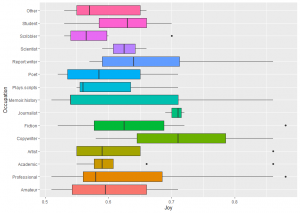

Apart from the practicalities of using a text generator, I also addressed plagiarism and ‘fake news’ (see below), and examined how the writer’s occupation (poet, copywriter) affected response.

Study 1 – August 2020

OpenAI’s GPT-2 was used for research into how professional writers use text generation.

Results

The study had three text generation and editing experiments, and included many feedback questions.

Nearly nine out of ten of these writers (89%) had never used a text generator before. It is easy when working in the computer domain to assume everyone knows about technological advances, but they do not, even in their own field.

The system I devised (with Fabrice Bellard) for the study is now live for everyone at Story Live (new page).

Future work

I am now working with OpenAI’s latest GPT-3, and open-source (OS) systems such as GPT-J and NeoX.

Links

The report will be published soon. Some have been on the Blog but these are now private.

2022

I am editing an anthology of creative writing with AI – a loose concept. This will be published later in the year by Leopard Print Publishing with Story Software Ltd UK.

The study had writing experiments which used an ambiguous image prompt (see below).

FAKE NEWS

For more information on ‘fake news’ research

Please visit Xinyi Zhou’s work here.

These are the most recent papers on fake news:

https://dl.acm.org/doi/10.1145/3395046

https://dl.acm.org/doi/10.1145/3377478

Credits

Origin

This study was devised and the study site programmed by Geoff Davis for post-graduate research at University of the Arts London (UAL) CCI, 2019-2020. The research supervisor is Professor Mick Grierson.

Text Synth

Now live for everyone at Story Live (new page).

Geoff Davis:

A publicly available text generator was used in the study experiments, as this is the sort of system people might use outside of the study.

It was also not practical to recreate (program, train, fine-tune, host) a large scale text generation system for this usability pre-study.

Fabrice Bellard, coder of Text Synth:

Text Synth is built using the GPT-2 language model released by OpenAI. It is a neural network of 1.5 billion parameters based on the Transformer architecture.

GPT-2 was trained to predict the next word on a large database of 40 GB of internet texts. Thanks to myriad web writers for the training data and OpenAI for providing their GPT-2 model.
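To give a feel for what "predict the next word" means, here is a minimal sketch in Python. It is not GPT-2 and not how Text Synth works: it is a toy word-pair counter, and the function names (`train_bigram`, `predict_next`, `generate`) and the tiny training sentence are made up for illustration only. GPT-2 does the same kind of job with a Transformer neural network and a vastly larger body of text.

```python
from collections import Counter, defaultdict

# Toy illustration only: a word-pair (bigram) counter, NOT GPT-2.
# All names and the training sentence are hypothetical.

def train_bigram(text):
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following

def predict_next(model, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]

def generate(model, start, length=5):
    """Extend `start` by repeatedly appending the predicted next word."""
    out = [start.lower()]
    for _ in range(length):
        nxt = predict_next(model, out[-1])
        if nxt is None:
            break
        out.append(nxt)
    return " ".join(out)

corpus = "the dog sees the man and the dog follows the man and the dog barks"
model = train_bigram(corpus)

print(predict_next(model, "the"))
print(generate(model, "man", 2))
```

A counter like this only ever looks at the single previous word, which is why its output quickly becomes repetitive. A large model such as GPT-2 takes a long stretch of preceding text into account, which is what makes its continuations usable as writing prompts.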

Fabrice Bellard granted permission to use Text Synth in the study on July 7, 2020.

See bellard.org

GPT-2

Visit OpenAI’s blog for more information on OpenAI’s text generation.

Image prompt man and dog photo

The image is an old one from public sources, used under a free license. See Man and Dog blog.

Copyright

All material on this website is copyright Geoff Davis London 2021.